Overview

Arceus is a marketplace platform that lets people rent out their computer's processing power to help train AI models. The platform connects students, researchers, and developers who need computing resources with people who have powerful computers sitting idle, creating an Uber-like network of shared computing power.

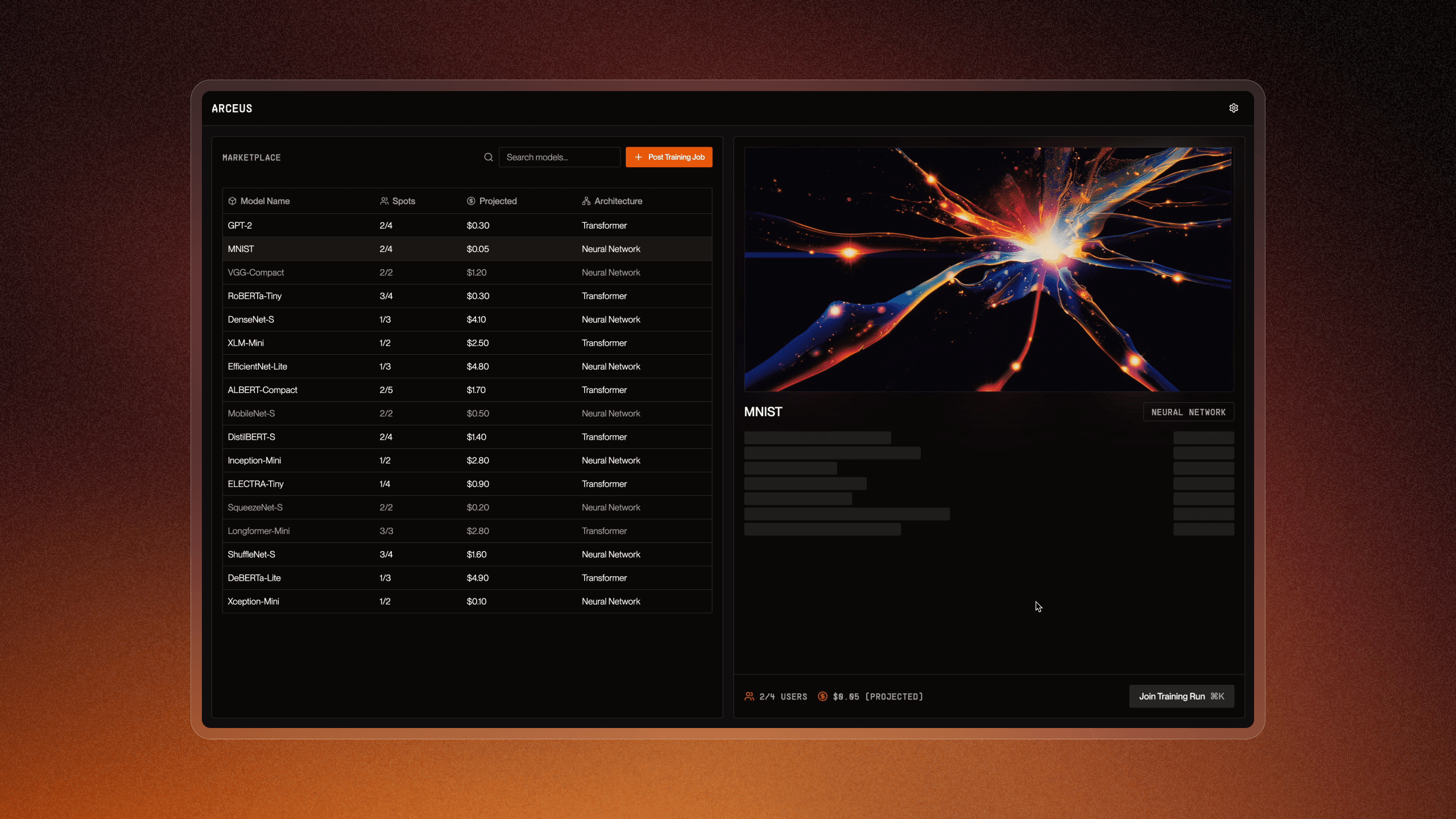

Marketplace

Developers can post their model training jobs to Arceus's marketplace, specifying details like model size and compute requirements, and those jobs are then matched with available computing devices in the network. Device owners can opt in to share their compute power during idle times, with Arceus handling all the technical complexity of distributing the training workload and keeping track of contributions.
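To make the matching step a bit more concrete, here's a minimal sketch of how a job could be paired with idle devices. This is not the actual Arceus matcher: the `Device` and `Job` classes, their fields, and the greedy largest-first strategy are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Device:
    device_id: str      # hypothetical identifier
    memory_gb: float    # memory the owner is willing to share
    idle: bool          # True when the owner has opted in and the device is free


@dataclass
class Job:
    job_id: str             # hypothetical identifier
    model_memory_gb: float  # total memory needed to hold the model


def match_devices(job: Job, devices: List[Device]) -> Optional[List[Device]]:
    """Greedily pick idle devices, largest first, until their combined
    memory covers the job. Returns None if the idle pool is too small."""
    chosen: List[Device] = []
    remaining = job.model_memory_gb
    idle_devices = sorted(
        (d for d in devices if d.idle),
        key=lambda d: d.memory_gb,
        reverse=True,
    )
    for device in idle_devices:
        if remaining <= 0:
            break
        chosen.append(device)
        remaining -= device.memory_gb
    return chosen if remaining <= 0 else None


pool = [
    Device("laptop-a", 8, idle=True),
    Device("desktop-b", 16, idle=True),
    Device("workstation-c", 32, idle=False),  # busy, so never selected
    Device("laptop-d", 4, idle=True),
]
match = match_devices(Job("job-1", model_memory_gb=20), pool)
print([d.device_id for d in match])
```

A real matcher would also have to weigh network latency, reliability, and price, which this sketch ignores.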

The platform uses dynamic pricing based on demand and computing power, with device owners earning around $0.30 per hour while overall costs for developers stay lower than traditional cloud services.
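As a rough illustration of what "dynamic pricing based on demand and computing power" could look like, here's a toy formula. Only the $0.30 per hour figure comes from the text above; the reference device speed, the demand ratio, and the clamping range are invented assumptions, not the real Arceus pricing model.

```python
def hourly_rate(
    device_tflops: float,
    pending_jobs: int,
    idle_devices: int,
    base_rate: float = 0.30,         # typical hourly earnings mentioned above
    reference_tflops: float = 10.0,  # assumed "average" device, made up for this sketch
) -> float:
    """Scale a base rate by how fast the device is and how scarce devices are."""
    # Faster devices earn proportionally more.
    compute_factor = device_tflops / reference_tflops
    # More pending jobs per idle device means higher demand.
    demand_ratio = pending_jobs / max(idle_devices, 1)
    # Clamp the demand multiplier so prices stay within 0.5x to 2x (assumed bounds).
    demand_factor = min(max(demand_ratio, 0.5), 2.0)
    return round(base_rate * compute_factor * demand_factor, 2)


print(hourly_rate(device_tflops=10.0, pending_jobs=5, idle_devices=5))
```

With balanced supply and demand and an average device, the rate stays at the base value; a spike in pending jobs pushes it up to at most double.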

Model Parallelism

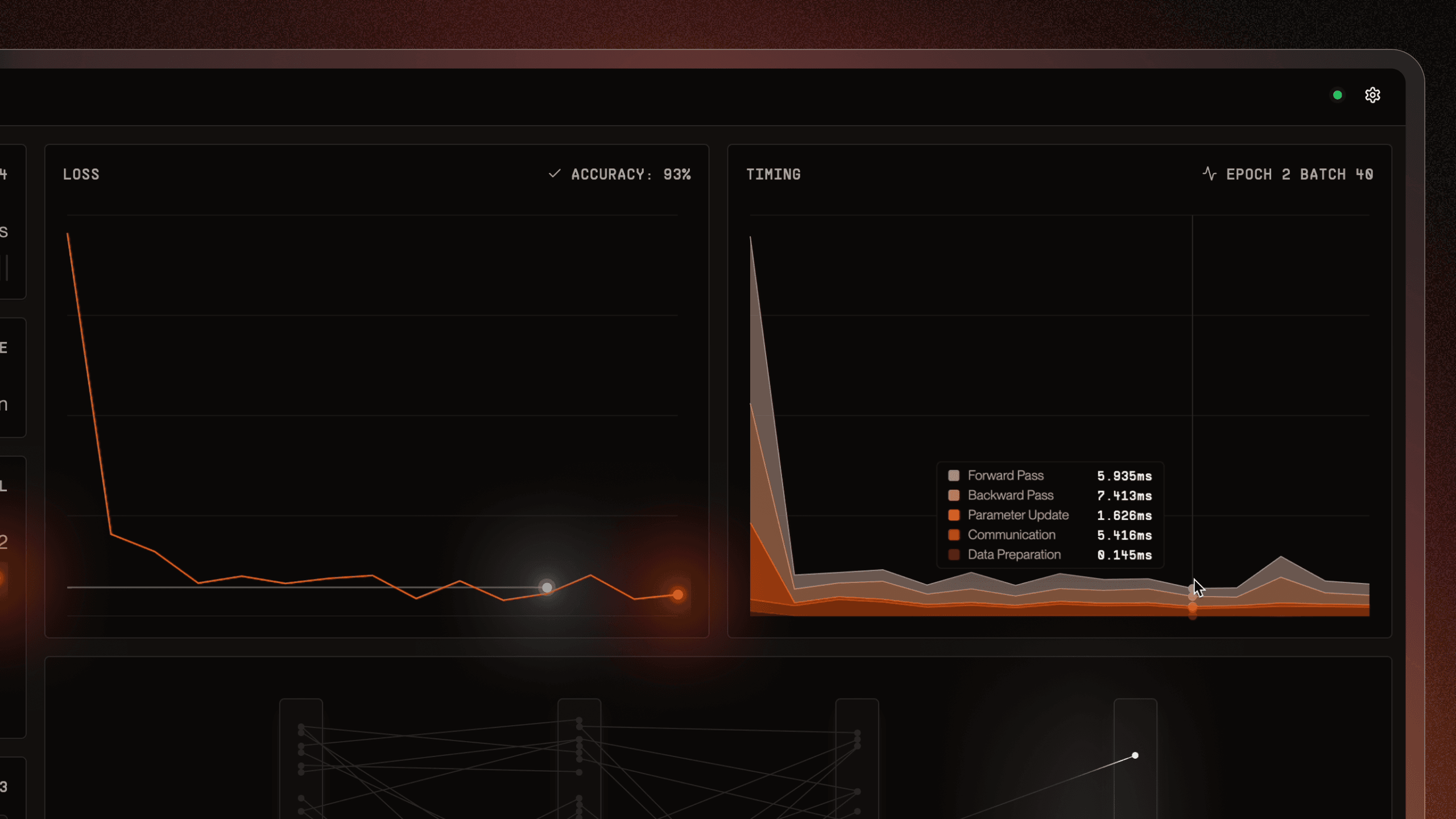

Model parallelism divides a large AI model into smaller pieces, with each piece running on a different device. This approach means each device only needs to store and process a portion of the model's parameters, making it possible to train models that would be too large for any single consumer device.
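Here's a tiny sketch of the idea in plain Python. The "layers" are just ordinary functions and the "devices" are just lists, so this is a conceptual toy under those assumptions rather than how Arceus actually shards a model. The helper names `split_layers` and `forward` are hypothetical.

```python
from typing import Callable, List

Layer = Callable[[float], float]


def split_layers(layers: List[Layer], num_devices: int) -> List[List[Layer]]:
    """Split the layers into contiguous chunks, as evenly as possible,
    so each device holds only its own slice of the model."""
    base, extra = divmod(len(layers), num_devices)
    chunks, start = [], 0
    for i in range(num_devices):
        size = base + (1 if i < extra else 0)
        chunks.append(layers[start:start + size])
        start += size
    return chunks


def forward(chunks: List[List[Layer]], x: float) -> float:
    """Run the forward pass stage by stage."""
    for device_layers in chunks:
        for layer in device_layers:
            x = layer(x)
        # In a real network, the activations x would be sent
        # to the next device at this point.
    return x


model = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x - 3,
    lambda x: x * x,
    lambda x: x + 10,
]
chunks = split_layers(model, num_devices=2)
print([len(c) for c in chunks], forward(chunks, 1))
```

The output of the split model is the same as running every layer on one machine; the difference is that no single device ever has to hold all five layers.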

Data parallelism, where each device runs a copy of the entire model on different chunks of training data, wouldn't work well for Arceus's use case because consumer devices often lack enough memory to store full modern AI models. Model parallelism trades some speed for much lower memory requirements per device, which is crucial when working with a network of consumer hardware rather than data center GPUs.
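A quick back-of-the-envelope calculation shows the memory difference. The 7-billion-parameter model and the 4-device split are example numbers picked for illustration, and the estimate counts only the weights at 4 bytes each (32-bit floats), ignoring gradients, optimizer state, and activations.

```python
def weight_memory_per_device_gb(
    num_params: int,
    num_devices: int,
    strategy: str,
    bytes_per_param: int = 4,  # 32-bit floats
) -> float:
    """Estimate the memory needed per device just to hold the weights."""
    total_gb = num_params * bytes_per_param / 1e9
    if strategy == "data":
        # Every device keeps a full copy of the model.
        return total_gb
    if strategy == "model":
        # Each device keeps only its share (assuming an even split).
        return total_gb / num_devices
    raise ValueError(f"unknown strategy: {strategy}")


params = 7_000_000_000  # illustrative model size
print(weight_memory_per_device_gb(params, 4, "data"))
print(weight_memory_per_device_gb(params, 4, "model"))
```

Under these assumptions, data parallelism needs the full 28 GB on every device, while model parallelism across four devices needs about 7 GB each, which is far closer to what a consumer machine can spare.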

Design

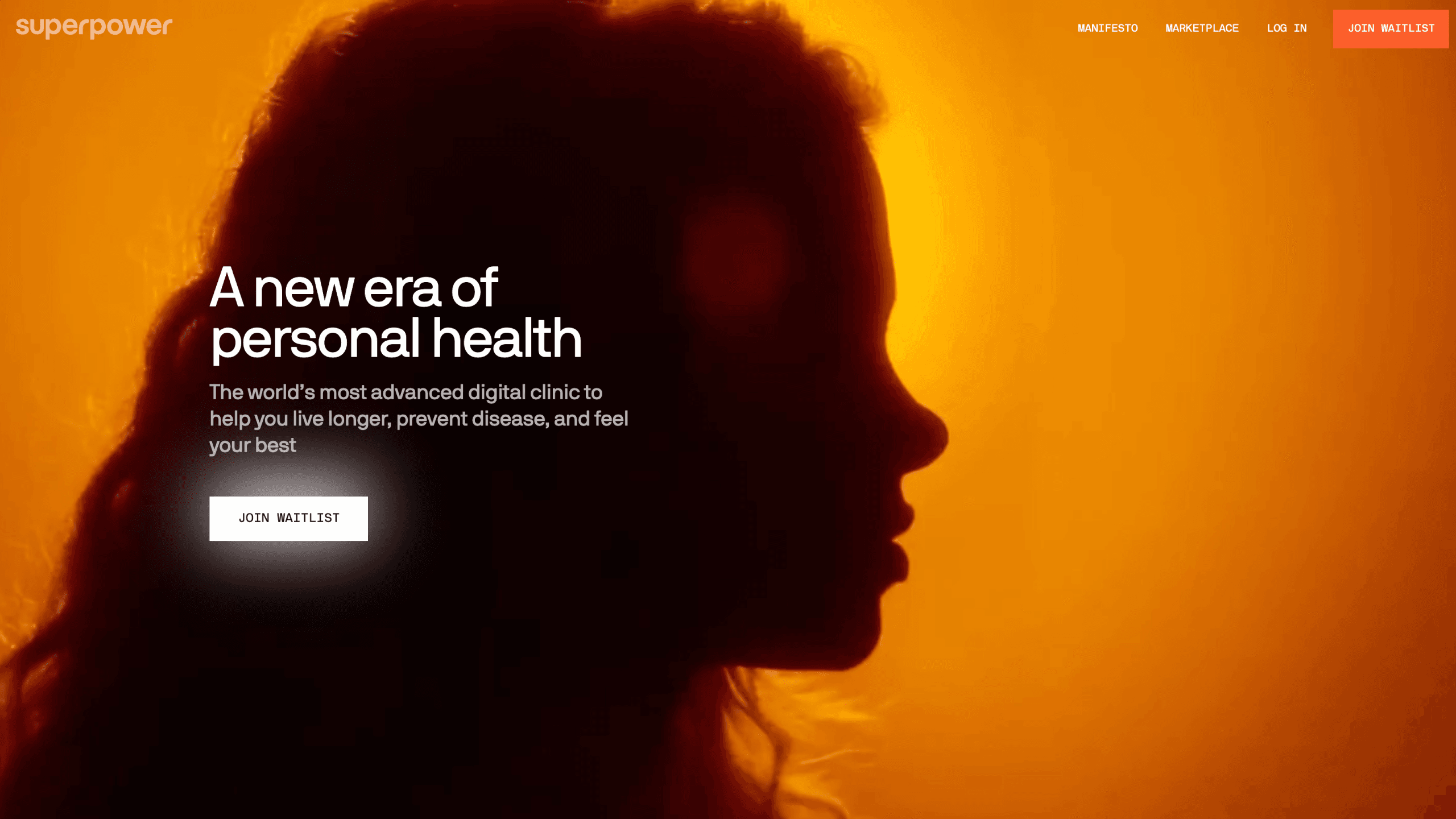

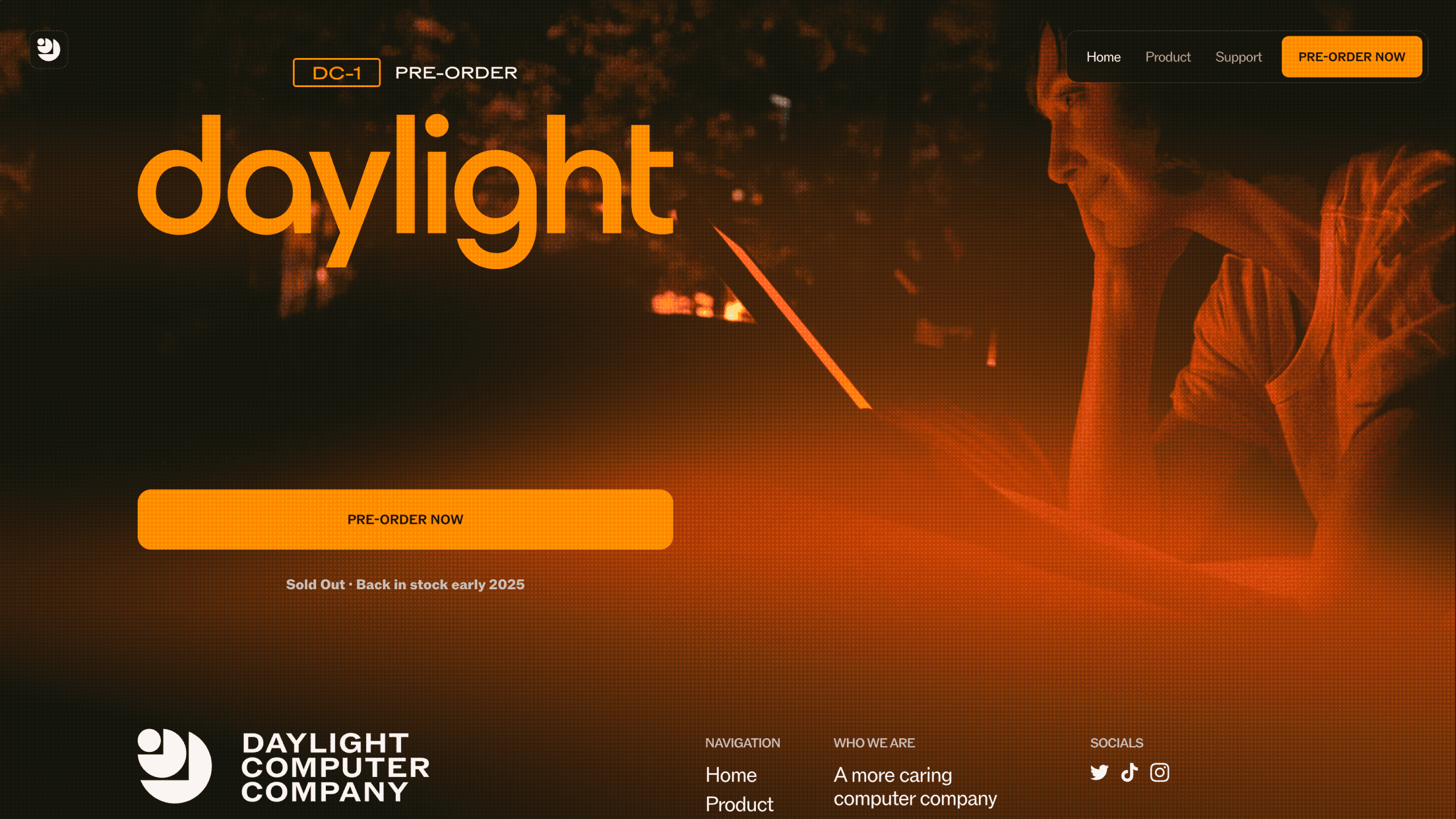

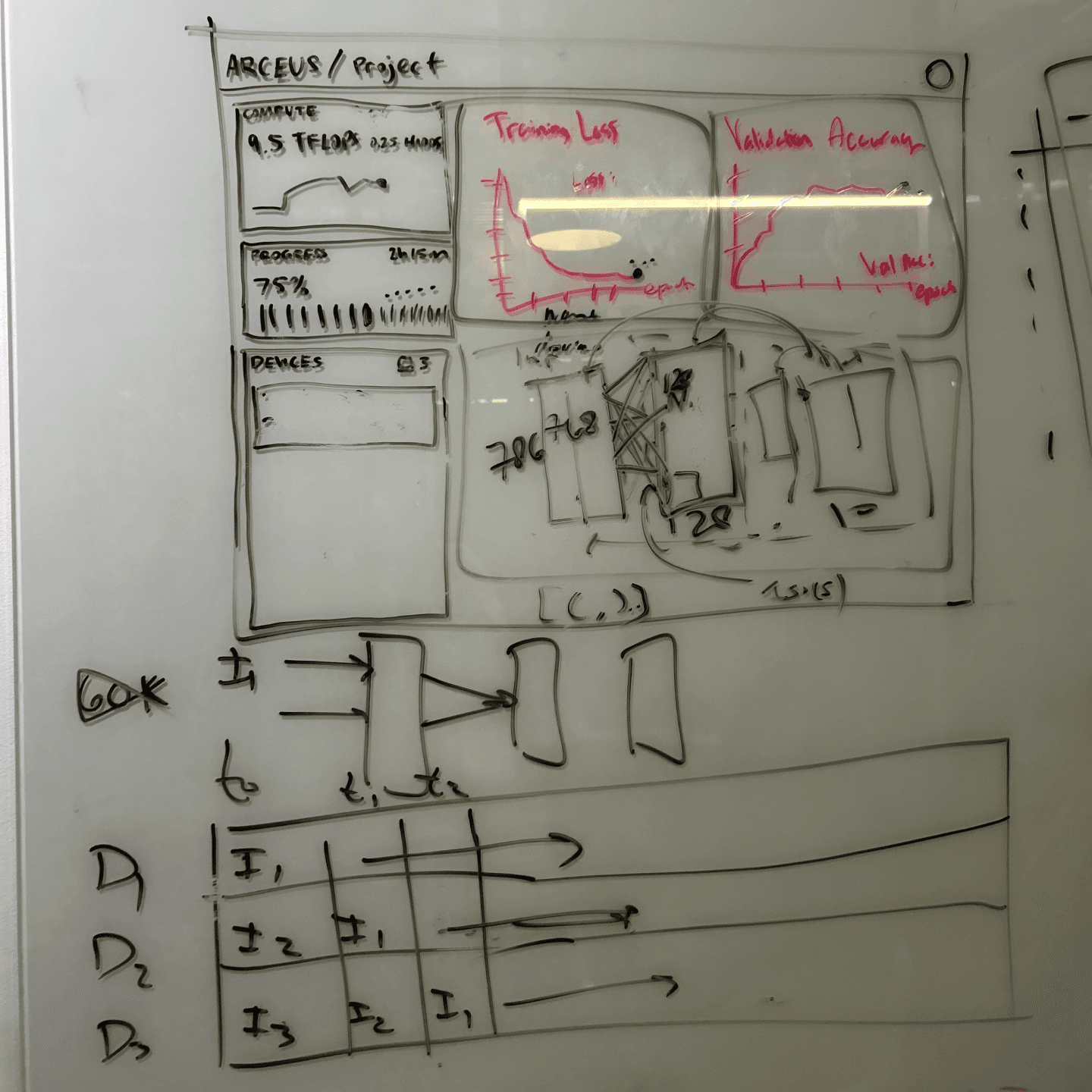

Creating the interface for Arceus was a fun exploration, balancing the info-dense live training dashboard with more stylistic elements and animations.

I took inspiration from the branding of Superpower and Daylight, with emphasis on the striking orange accents. In the model training animations, I also used the LCD screen effect from the Daylight footer.

It's made with Next.js, Tailwind, Shadcn UI, and Recharts. The font combination is Supply Mono and Montreal from Pangram Pangram. The neural network and transformer animations are both made in pure CSS! Check out a few design highlights:

To be honest, the neural network animation is functionally useless and provides no information. I just had the idea of visualizing the neuron activations to fill empty space, and it led to a rabbit hole of CSS animations, trigonometry, and lots of timing struggles. The outcome is pretty cool though!

Results

Here's the complete platform demo video! If you're interested in a more in-depth breakdown of the technical details, check out Rajan's writeup.

We achieved really good performance training across a network, first with traditional neural networks and then with transformers. We demoed to over 200 people, including C-suite members from Ollama and Ramp (thanks to Calvin for the mentorship). The project was built as part of Launchpoint at UWaterloo.

Arceus democratizes AI training the same way OpenAI and Anthropic have done for inference. They are tapping into a massive underserved market with potential for a super strong network effect between customers.

Jeff Morgan, CEO @ Ollama

Some photos of the process:

And yes, it's named after the Pokémon.